This defeats the purpose of having multiple neurons in the hidden layers! Instead, initialize to small, random numbers to break the symmetry. If all of the weights were one, then each hidden neuron receives the same value: the sum of all of the components of the input. To compute the input into the first hidden layer, we take the weighted sum of the weights and the input. Why don’t we just initialize all of them to be the same number like one or zero? Think back to the structure of a neural network. One additional heuristic is to initialize the parameters to small, random numbers. Remember that each layer has a different number of neurons and thus requires a different weight matrix and bias vector for each. Then we initialize our weights and biases for each layer. """Implementation of a MultilayerPerceptron (MLP)""" The exact activation function we choose is unimportant at this point since our algorithms will be generalizable to any activation function. We also define the activation function to be a sigmoid function, but we’ll discuss the details of the activation function a bit later. We’ll need to pass in the number of layers and size of each layer as a list of integers. Let’s get to some code! First, we can start by creating a class for our multilayer perceptron.

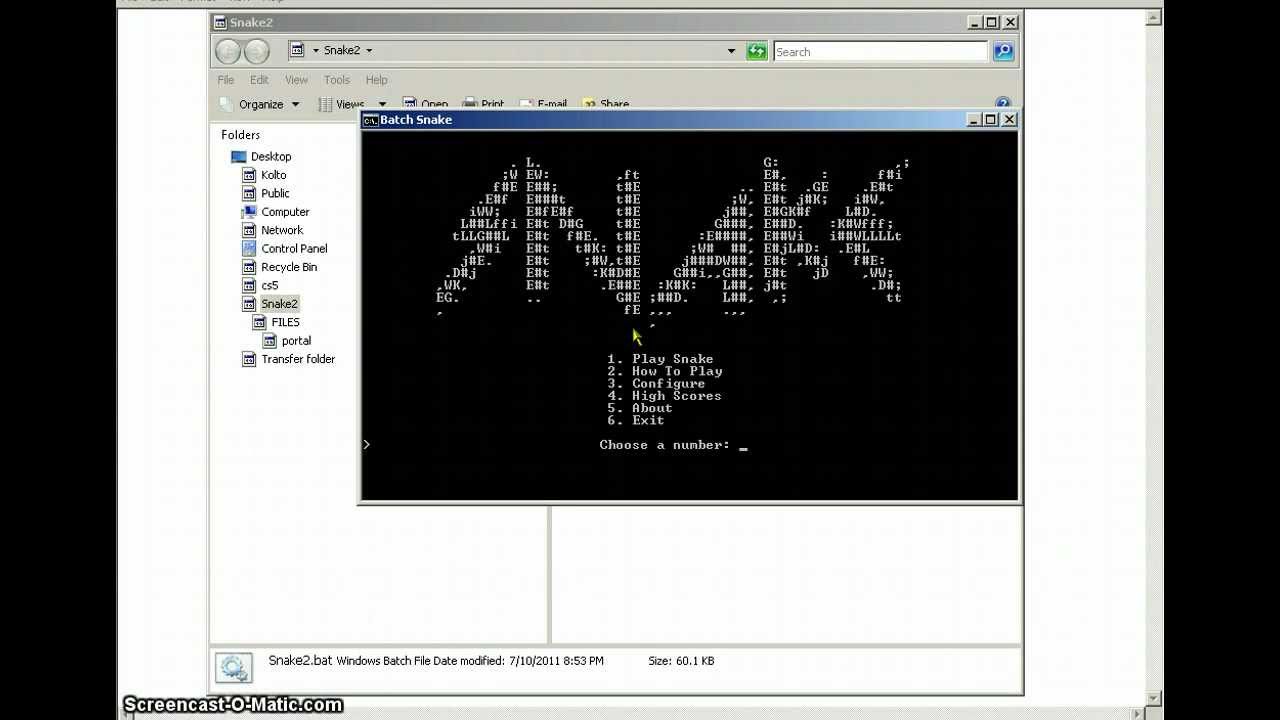

#Batch rpg game codes code

Minibatch Stochastic Gradient Descent Code To recap, stochastic gradient descent drastically improves the speed of convergence by randomly selecting a batch to represent the entire training set instead of looking through the entire training set before updating the parameters. Run through our network to and compute the average gradient, i.e., and.There will be minibatches of size where is the ceiling operator. Shuffle the training set and split into minibatches of size.We can repeat this algorithm for however many epochs we want. Here’s an algorithm that describes minibatch stochastic gradient descent. That being said, stochastic gradient descent converges, i.e., reaches the minimum (or around there), much faster than gradient descent. However, with stochastic gradient descent, we take a much noisier path because we’re taking minibatches, which cause some variation. With gradient descent, the path we take to the minimum is fairly straightforward. The goal is to get to the star, the minimum. Each bend in the line represents a point in the parameter space, i.e., a set of parameters. Think of this as a 2D representation of a 3D cost function (see Part 1’s surface plot). In this plot, the axes represent the parameters, and the ellipses are cost regions where the star is the minimum. Speaking of convergence, here’s a visual representation of (batch) gradient descent versus (minibatch) stochastic gradient descent.

If we set it small, then the network won’t converge. If we set it too large, then our network will take a longer time to train because it will be more like batch gradient descent. The batch size is another hyperparameter: we set it by hand.

The sum is over all of the samples in our minibatch of size. Mathematically, we can write it like this. This technique is called minibatch stochastic gradient descent. Then we shuffle our training data and repeat! After we’ve exhausted all of our minibatches, we say one epoch has passed.

#Batch rpg game codes update

At each minibatch, we average the gradient and update our parameters before moving on to the next minibatch. Instead, we randomly shuffle our data and divide it up into minibatches. It wouldn’t be a good use of the training set if we just randomly sampled 100 images each time. That’s a speedup factor of 600! However, we also want to make sure that we’re using all of the training data. For example, instead of running all 60,000 examples of MNIST through our network and then updating our parameters, we can randomly sample 100 examples and run that random sample through our network. Instead, we can take a randomly-sampled subset of our training data to represent the entire training set. But if we have a large training set, we still have to wait to run all inputs before we can update our parameters, and this can take a long time! Recall in vanilla gradient descent (also called batch gradient descent), we took each input in our training set, ran it through the network, computed the gradient, and summed all of the gradients for each input example.
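The following is a minimal sketch, in plain Python without NumPy, of the multilayer perceptron constructor described above. It takes a list of layer sizes such as `[784, 30, 10]`, uses a sigmoid activation, and draws every weight and bias from a Gaussian with a small standard deviation. The `init_scale` and `seed` parameters and the `forward` method are illustrative assumptions, not the original code. `forward` only shows how each layer's weight matrix and bias vector are used.

```python
import math
import random


def sigmoid(z):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


class MultilayerPerceptron:
    """Implementation of a MultilayerPerceptron (MLP)"""

    def __init__(self, layer_sizes, init_scale=0.01, seed=None):
        # layer_sizes lists the input size, each hidden size, and the output size,
        # so the number of layers is implied by its length.
        self.layer_sizes = layer_sizes
        self.activation = sigmoid
        rng = random.Random(seed)
        # One weight matrix per layer after the input:
        # weights[l] has layer_sizes[l + 1] rows and layer_sizes[l] columns.
        self.weights = [
            [[rng.gauss(0.0, init_scale) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
        ]
        # One bias vector per layer after the input, also small and random.
        self.biases = [
            [rng.gauss(0.0, init_scale) for _ in range(n_out)]
            for n_out in layer_sizes[1:]
        ]

    def forward(self, x):
        """Illustrative forward pass: a = sigmoid(W a + b), layer by layer."""
        a = x
        for W, b in zip(self.weights, self.biases):
            a = [self.activation(sum(w * a_j for w, a_j in zip(row, a)) + b_i)
                 for row, b_i in zip(W, b)]
        return a


mlp = MultilayerPerceptron([784, 30, 10], seed=0)
print([(len(W), len(W[0])) for W in mlp.weights])  # [(30, 784), (10, 30)]
```

Because each layer gets its own matrix shaped by its neighbours' sizes, the same constructor works for any number of hidden layers.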
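To see the symmetry problem concretely, this small sketch compares two initializations of a single hidden layer of four neurons: every weight equal to one, and small random weights. The input vector is arbitrary, and biases are omitted for brevity. Both choices are assumptions made only for the illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_activations(weights, x):
    """Sigmoid activations of one hidden layer (biases omitted for brevity)."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in weights]


x = [0.2, -1.0, 3.0]  # an arbitrary 3-component input
n_hidden = 4

all_ones = [[1.0] * len(x) for _ in range(n_hidden)]
rng = random.Random(0)
small_random = [[rng.gauss(0.0, 0.01) for _ in x] for _ in range(n_hidden)]

same = hidden_activations(all_ones, x)        # each neuron sees 0.2 - 1.0 + 3.0
varied = hidden_activations(small_random, x)  # each neuron sees a different weighted sum
print(same)    # four identical values
print(varied)  # four different values, all close to 0.5
```

With all-ones weights the four neurons are interchangeable. With small random weights they already differ, which gives training something to build on.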
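The shuffle-and-split step of minibatch stochastic gradient descent might look like the following sketch. `make_minibatches` is a hypothetical helper that works on example indices rather than on the data itself, so the same split can index both inputs and labels.

```python
import math
import random


def make_minibatches(n_examples, batch_size, rng):
    """Shuffle example indices and split them into minibatches of batch_size.

    There are ceil(n_examples / batch_size) minibatches; the last one is
    smaller whenever batch_size does not divide n_examples evenly.
    """
    indices = list(range(n_examples))
    rng.shuffle(indices)
    return [indices[i:i + batch_size] for i in range(0, n_examples, batch_size)]


rng = random.Random(0)
batches = make_minibatches(60_000, 100, rng)      # MNIST-sized training set
print(len(batches), math.ceil(60_000 / 100))      # 600 600
```

Calling the helper again at the start of every epoch produces a fresh shuffle, so each epoch visits every example exactly once but in a different grouping.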
